Basic Performance Optimization

April 27, 2019Introduction

I’m working on a Bitcoin library on my own time, and as I work through it I want its performance characteristics to be good, so I’m going to do some profiling along the way and look for low-hanging fruits. I thought I’d share my first stab at this.

For context, what I’m working on today is a Base58-encoding module in F♯. I wrote it while learning how Base58 works and how it’s used in Bitcoin, and performance wasn’t at the forefront of my mind. Now that I have a working module, I decided to come back and profile it to see what could be improved.

To start, let’s see the code:

namespace BitThicket.Bitcoin

type Base58Error =

| InvalidBase58String of string

type Base58CheckError =

| IncorrectChecksum of byte array

type EncodingError =

| UnsupportedEncoding of string

| InvalidEncoding of string

| Base58Error of Base58Error

| Base58CheckError of Base58CheckError

module internal Base58 =

open System

open System.IO

open System.Text

open BitThicket.Bitcoin

type Base58String = Base58String of string

let private _encTable =

[| '1'; '2'; '3'; '4'; '5'; '6'; '7'; '8'; '9'; 'A'; // 0-9

'B'; 'C'; 'D'; 'E'; 'F'; 'G'; 'H'; 'J'; 'K'; 'L'; // 10-19

'M'; 'N'; 'P'; 'Q'; 'R'; 'S'; 'T'; 'U'; 'V'; 'W'; // 20-29

'X'; 'Y'; 'Z'; 'a'; 'b'; 'c'; 'd'; 'e'; 'f'; 'g'; // 30-39

'h'; 'i'; 'j'; 'k'; 'm'; 'n'; 'o'; 'p'; 'q'; 'r'; // 40-49

's'; 't'; 'u'; 'v'; 'w'; 'x'; 'y'; 'z' |] // 50-57

let private _validate raw =

if String.forall

(fun c -> Array.exists (fun e -> c = e) _encTable) raw

then Base58String raw |> Ok

else InvalidBase58String raw |> Error

let validate raw = _validate raw |> Result.mapError Base58Error

let private divrem n d =

let rem : bigint ref = ref 0I

let next = bigint.DivRem(n, d, rem)

(next, !rem)

let rec private _encode (acc:MemoryStream) n =

if n = 0I then

Array.foldBack

(fun b (sb:StringBuilder) ->

sb.Append(_encTable.[int b]))

(acc.ToArray())

(StringBuilder())

|> (fun sb -> sb.ToString())

else

let rem : bigint ref = ref 0I

let next = bigint.DivRem(n, 58I, rem)

acc.WriteByte(!rem |> byte)

_encode acc next

// this could definitely benefit from Span work

// expects input array to be in big-endian order (higher-order

// bytes precede lower-order ones - i.e., data[0] is the MSB)

let encode (data:byte array) =

use ms = new MemoryStream()

data

|> Array.append [|0uy|]

|> Array.rev

|> bigint

|> _encode ms

let rec private _decode (s:string) (acc:bigint) =

if s.Length = 0 then Bits.Converter.GetBytesBE(acc)

else Array.findIndex (fun c -> c = s.[0]) _encTable

|> (fun idx -> 58I * acc + bigint idx)

|> _decode (s.Substring(1))

let decode (data:string) =

validate data

// this is tricky because when BigInteger.ToByteArray()

// is called, it will insert a leading zero byte if

// necessary to ensure a positive value when the array

// is round-tripped.

|> Result.bind (fun (Base58String encoding) ->

_decode encoding 0I

|> if data.[0] = '1'

then Bits.ensureZeroMsByte

else Bits.removeZeroMsBytes

|> Ok)This pre-optimized code is avalable on GitLab.

Just at a glance, we can spot some things that look likely to be costly. For example, the line highlighted below stands out as a potentially expensive operation.

let rec private _decode (s:string) (acc:bigint) =

if s.Length = 0 then Bits.Converter.GetBytesBE(acc)

else Array.findIndex (fun c -> c = s.[0]) _encTable

|> (fun idx -> 58I * acc + bigint idx)

|> _decode (s.Substring(1))For each character in the string * each character in the encoding table, we are allocating several System.Numerics.BigInteger objects. It’s easy to get ahead of ourselves though - we don’t want to go much further than this initial intuition without measurements to guide us, and this is where a profiler becomes a necessary tool. I have some Expecto tests written for this library, and luckily for us, Expecto has a mode

of test-running that allows the user to specify a number of minutes to “stress”

the test (just running repeatedly for a duration of time). We’ll use that to let

the profiler get measurements. After running the test suite for this module for

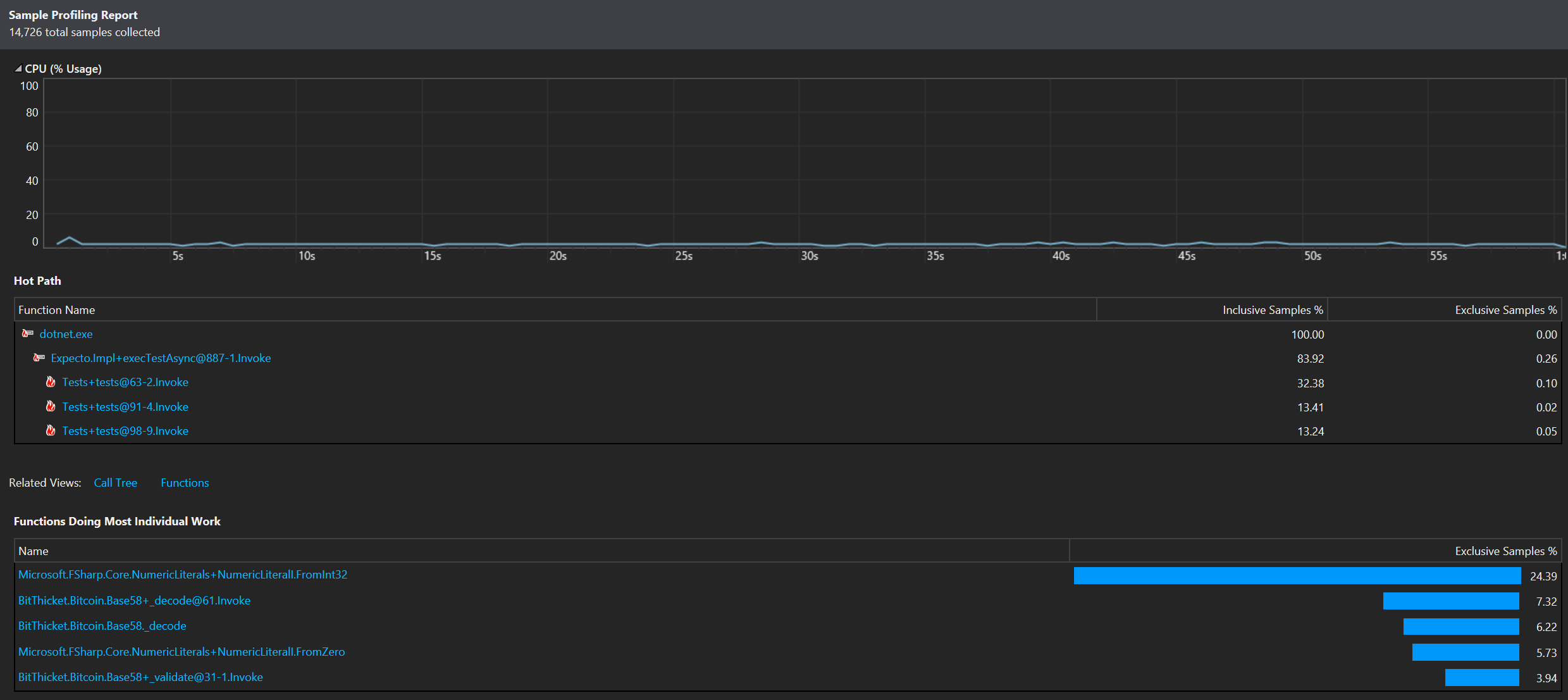

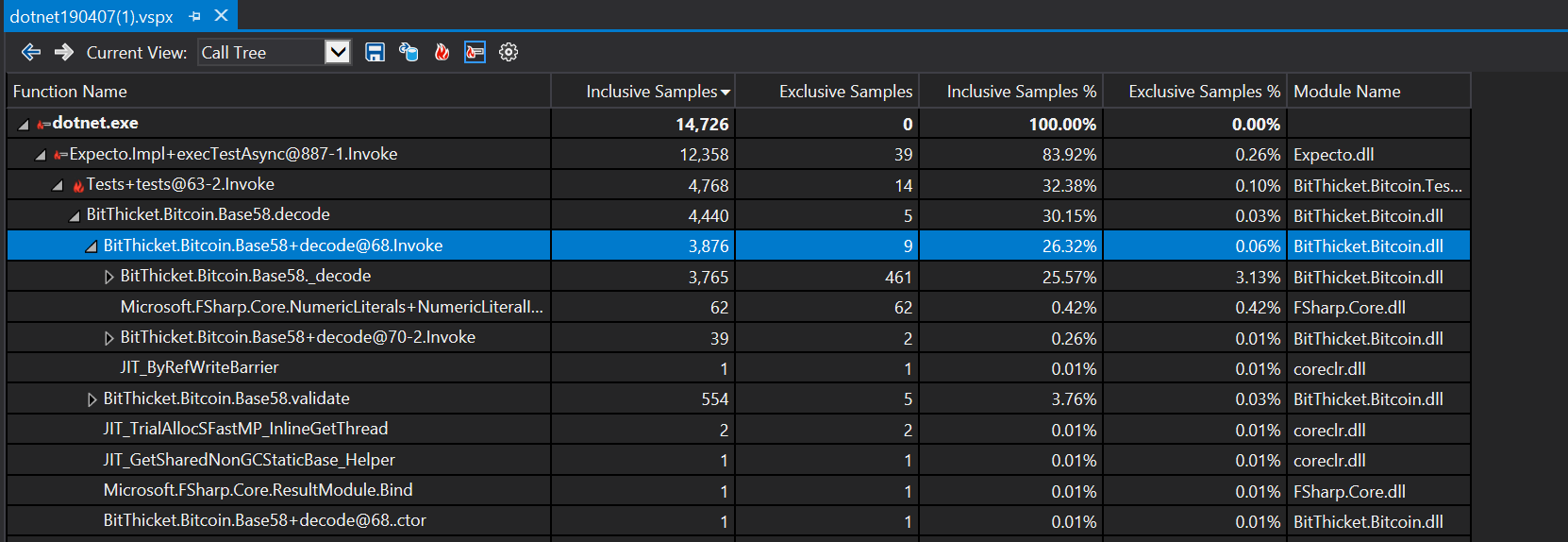

1 minute under the profiler, these are the results:

If you click on the image to zoom in, you should be able to see the results. I was

expecting the base58 functions to be on top because that’s what we’re profiling here, but

instead I was surprised to see the large amount of time spent in

Microsoft.FSharp.Core.NumericLiterals+NumericLiteral1.FromInt32.

If you’re unfamiliar with this function (as I was), the MSDN documentation will be helpful.

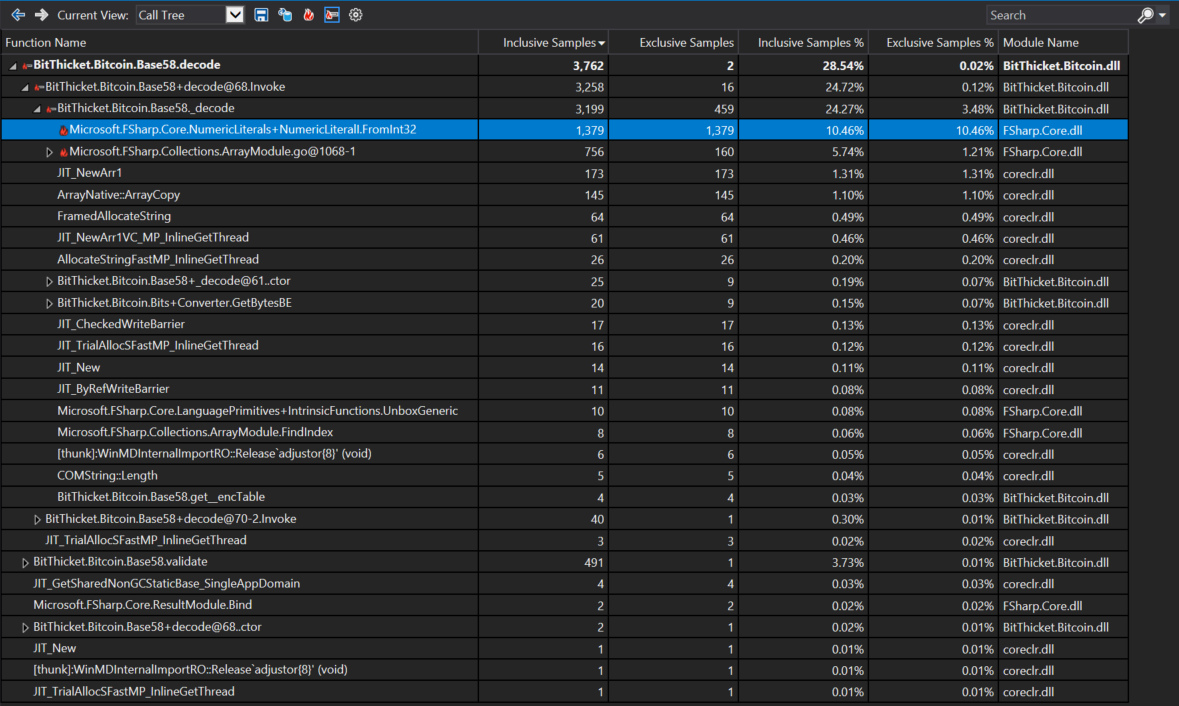

Going back to the profiler, we can look at this with a bit more specificity in the call tree view.

From this, we can then see that using a BigInteger literal in a hot path actually

imposes a non-trivial cost that we can easily avoid by binding the value 58I to a module variable.

module internal Base58 =

open System

open System.IO

open System.Text

open BitThicket.Bitcoin

type Base58String = Base58String of string

let _58I = 58I

let private _encTable =

// ...And we can simply replace our use of the literal with this module variable:

let rec private _decode (s:string) (acc:bigint) =

if s.Length = 0 then Bits.Converter.GetBytesBE(acc)

else Array.findIndex (fun c -> c = s.[0]) _encTable

|> (fun idx -> _58I * acc + bigint idx)

|> _decode (s.Substring(1))Expanding the BitThicket.Bitcoin.Base58+decode@70-2.Invoke node in the call tree reveals

that another similar function shows up:

Microsoft.FSharp.Core.NumericLiterals+NumericLiteral1.FromZero. Unfortunately I was

careless when taking the screenshot and the columns aren’t wide enough to spot this

easily, but here it is:

I just wanted to point this out because it’s there, but I’m actually didn’t do anything about this for two reasons.

- It has so very little impact on the cost of the

decodefunction - The

FromZeromethod actually gets optimized out in release mode.

Another place we wind up calling the FromInt32 function is another spot on the same line

in the _decode function: bigint idx. This casting operation winds up calling

FromInt32.

We use the decode table to look up indexes and then convert those to BigInteger for use

in the computation, so we’ll need to create another lookup table to avoid those allocations.

After our changes, we’ll have the following:

module internal Base58 =

open System

open System.IO

open System.Text

open BitThicket.Bitcoin

type Base58String = Base58String of string

let _58I = 58I

let private _encTable =

[| '1'; '2'; '3'; '4'; '5'; '6'; '7'; '8'; '9'; 'A'; // 0-9

'B'; 'C'; 'D'; 'E'; 'F'; 'G'; 'H'; 'J'; 'K'; 'L'; // 10-19

'M'; 'N'; 'P'; 'Q'; 'R'; 'S'; 'T'; 'U'; 'V'; 'W'; // 20-29

'X'; 'Y'; 'Z'; 'a'; 'b'; 'c'; 'd'; 'e'; 'f'; 'g'; // 30-39

'h'; 'i'; 'j'; 'k'; 'm'; 'n'; 'o'; 'p'; 'q'; 'r'; // 40-49

's'; 't'; 'u'; 'v'; 'w'; 'x'; 'y'; 'z' |] // 50-57

let private _decTable =

['1',0I; '2',1I; '3',2I; '4',3I; '5',4I; '6',5I; '7',6I;

'8',7I; '9',8I; 'A',9I; 'B',10I; 'C',11I; 'D',12I; 'E',13I;

'F',14I; 'G',15I; 'H',16I; 'J',17I; 'K',18I; 'L',19I; 'M',20I;

'N',21I; 'P',22I; 'Q',23I; 'R',24I; 'S',25I; 'T',26I; 'U',27I;

'V',28I; 'W',29I; 'X',30I; 'Y',31I; 'Z',32I; 'a',33I; 'b',34I;

'c',35I; 'd',36I; 'e',37I; 'f',38I; 'g',39I; 'h',40I; 'i',41I;

'j',42I; 'k',43I; 'm',44I; 'n',45I; 'o',46I; 'p',47I; 'q',48I;

'r',49I; 's',50I; 't',51I; 't',52I; 'u',53I; 'v',54I; 'w',55I;

'x',56I; 'y',57I; 'z',58I]

|> Map.ofList

// ...

let rec private _decode (s:string) (acc:bigint) =

if s.Length = 0 then Bits.Converter.GetBytesBE(acc)

else _decTable.[s.[0]]

|> (fun idx -> _58I * acc + idx)

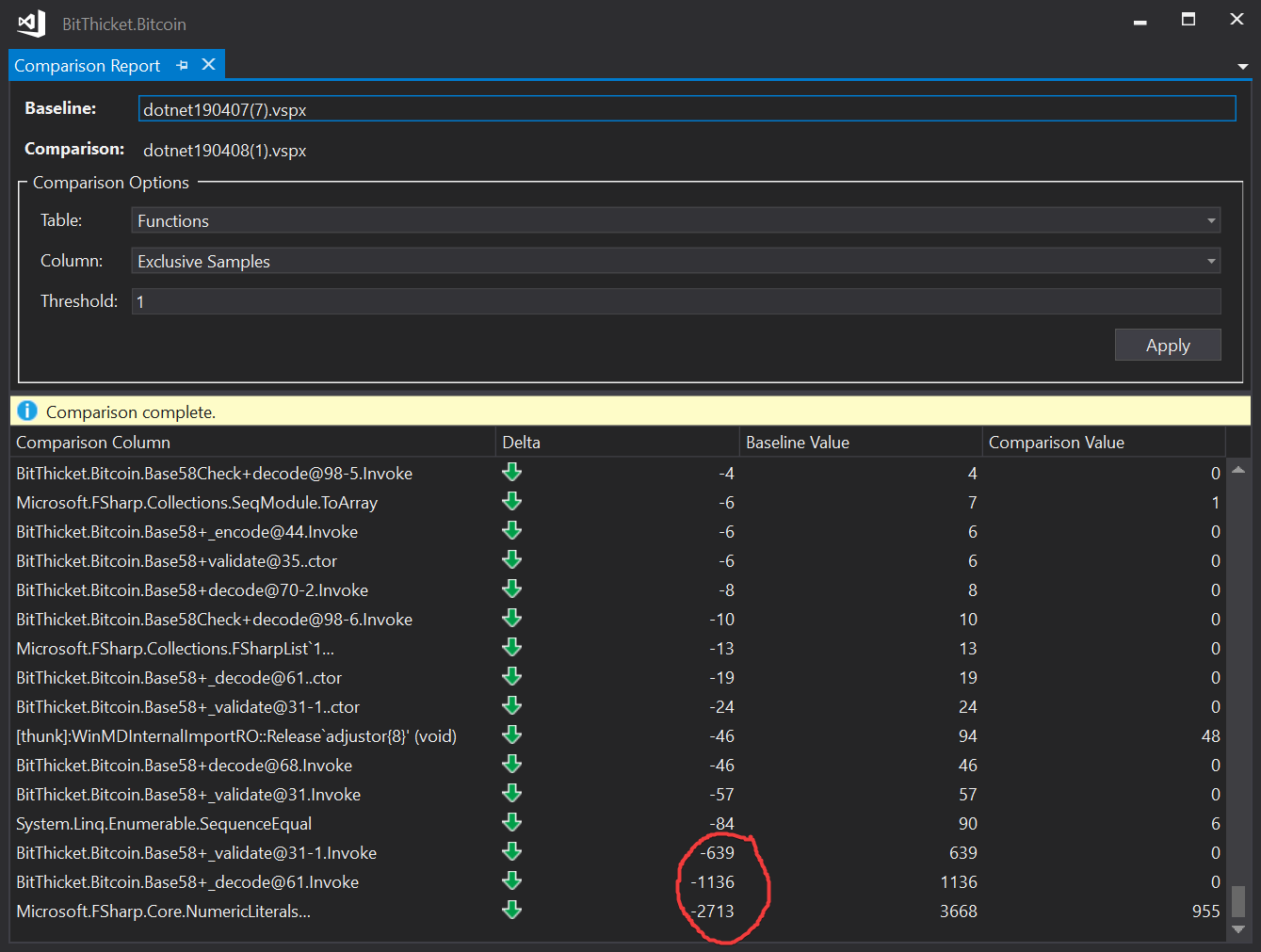

|> _decode (s.Substring(1))Now the function is allocating far fewer BigInteger objects, and the results show that we’ve

measurably improved the performance of the _decode function (and be extension, the

decode function).

There are more things we could do. For example, there’s a call to String.Substring in

the recursive _decode that wastefully allocates byte arrays. But for today, we’ll

take the win and move on.